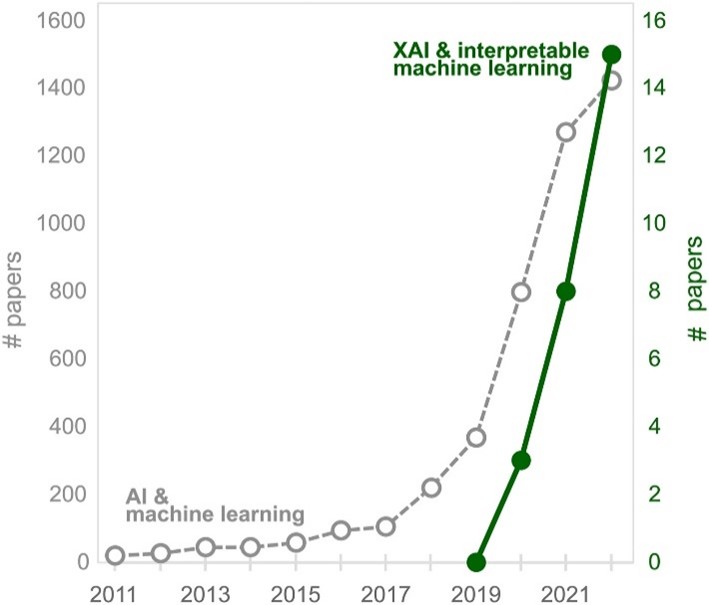

Agriculture faces many challenges, such as disease and pest infestation, suboptimal soil management, and inadequate drainage and irrigation. These challenges result in significant crop losses and environmental hazards, the latter primarily due to the excessive use of chemicals. Several studies have been conducted to address these issues. The field of Artificial Intelligence, with its rigorous learning capabilities, has become a key technique for solving different agriculture-related problems (2). Systems are being developed throughout the world to help agricultural experts find better solutions (3). Among these systems, it is widely recognised that Artificial Intelligence (AI) techniques will play a key role in the foreseeable future. In parallel, the introduction of algorithms able to explain AI algorithms (also known as eXplainable AI or XAI) is deemed necessary. An interesting analysis on this subject was done by Masahiro Ryo (4), from whose paper the following diagram is taken, illustrating the AI/XAI usage trend.

Adopting an explainable AI approach is also critical when the end users are farmers or agricultural companies. Given an explanation of the AI’s decision-making process, farmers can gain a better understanding of how it works, increase their trust in the technology, and make informed decisions about how to optimize their operations. This can result in significant cost savings, as farmers can focus their resources on the areas that will yield the greatest benefits.

Man and Machine Gap

In the past decade, we have witnessed massive development in Machine Learning and related fields. Thanks to the increased availability of large datasets and more accessible, powerful computing resources, Deep Learning techniques experienced a boost that revitalized Machine Learning. The resulting super-human performance of AI technologies in solving complex problems has made AI extremely popular. However, this increase in performance has been achieved with increasingly complex models that are not interpretable by humans (Black-Box AI). Furthermore, in contexts closely tied to human experience, the use of AI algorithms is still viewed as risky and untrustworthy. Examples include healthcare applications that support clinicians’ decisions by suggesting a specific treatment for a patient based on predicted complications, or banking applications that suggest whether a customer who has applied for a loan is creditworthy. In these contexts, it is essential that decision-makers have access to information that explains why a patient risks a specific complication or why an applicant should not get a loan. The same holds elsewhere: a mechanical maintenance operator can make little use of a prediction of a future system failure without an indication of which component is beginning to degrade, just as a farmer should know in detail which suboptimal management practice will cause a predicted crop failure so that he or she can take informed and targeted action.

The XAI bridge

In response to these needs, XAI has grown rapidly within the AI domain. It deals with the development of algorithms able to explain AI algorithms, thus closing the gap described above. Its relevance has become evident not only in academia, but also in industry and institutions. In the AI Act (1), the European Union has explicitly declared the ‘interpretability’ of AI to be a fundamental characteristic of all AI systems, and indispensable for those AI solutions deemed to be high-risk, if they are to be used on European soil.

Explainable AI, also known as Interpretable AI or Explainable Machine Learning (XML), refers either to an AI system over which humans can maintain oversight, or to the methods used to achieve this.

Main XAI techniques

In AI, Machine Learning (ML) algorithms can be categorized as white-box or black-box. White-box models, sometimes called glass-box models, provide results that are understandable to experts in the domain. Black-box models, on the other hand, are AI systems whose inputs and operations are not visible to the user or to another interested party. The goal of XAI is to ‘open up’ black boxes and make them as similar to white boxes as possible. XAI algorithms follow two principles:

- Transparency: the processes of extracting features from the data and generating labels can be described and justified by the designer.

- Interpretability: the ML model’s behaviour can be comprehended, and the underlying basis for its decisions can be presented in a human-understandable way.

If such algorithms fulfil these principles, they provide a basis for justifying decisions, tracking them and thereby verifying them, improving the algorithms, and exploring new facts.

Over the years, numerous methods have been introduced to describe the operation of ‘Black-Box’ AI algorithms such as Deep Neural Networks. These methods are characterized along two axes:

- Local and global methods: these methods explain different aspects of the AI model’s behaviour. Local explanations explain single model decisions, while global explanations characterize the general behaviour of a model or of one of its parts (e.g., a neuron, a layer, an entire network). In some cases, a global explanation is derived from local explanations, but this is not possible for all AI models.

- Post-hoc and ante-hoc methods: post-hoc (“after the event”) methods provide the explanation after the model has been trained with a standard training procedure; examples are LIME, BETA, and LRP. Ante-hoc methods are interpretable by design, i.e., they are transparent by nature: they introduce a new network architecture that produces an explanation as part of its decision.
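As a rough illustration of the two axes, the sketch below applies a very simple post-hoc technique (finite-difference sensitivity analysis) to an already "trained" model, first locally for one input and then globally by averaging the local results over a dataset. The model `black_box`, its three feature names, and the sample values are all hypothetical and chosen only for illustration; this is not one of the methods named above.

```python
# Hypothetical stand-in for a trained black-box model: predicted yield loss
# from three made-up field measurements (soil moisture, pest density, nitrogen).
FEATURES = ["soil_moisture", "pest_density", "nitrogen"]

def black_box(x):
    moisture, pests, nitrogen = x
    return 2.0 * (moisture - 0.6) ** 2 + 0.5 * pests ** 2 - 0.1 * nitrogen

def local_explanation(model, x, eps=1e-4):
    """Post-hoc, local: sensitivity of the output to each feature around one input x."""
    scores = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        # Central finite difference approximates the partial derivative.
        scores.append((model(up) - model(down)) / (2 * eps))
    return scores

def global_explanation(model, dataset):
    """Post-hoc, global: mean absolute local sensitivity over a whole dataset."""
    local = [local_explanation(model, x) for x in dataset]
    n_features = len(dataset[0])
    return [sum(abs(row[i]) for row in local) / len(local) for i in range(n_features)]

if __name__ == "__main__":
    fields = [[0.6, 2.0, 1.0], [0.1, 0.0, 1.0]]  # made-up samples
    print(dict(zip(FEATURES, local_explanation(black_box, fields[0]))))
    print(dict(zip(FEATURES, global_explanation(black_box, fields))))
```

For the first field, pest density dominates the local explanation, while the global summary gives soil moisture a comparable weight because it matters strongly for the second field. This is the sense in which a global explanation can be built from local ones.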

There have been several attempts to explain the predictions of AI models. The most active line of work addresses the problem of feature attribution, which aims to explain which part of the input is most responsible for the output of the model. For example, in Computer Vision tasks that detect plant diseases, heatmaps can highlight the image regions, such as the most damaged area of a leaf, that most affect the output. Other techniques include feature visualization and interpretability by design.
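One simple way to obtain such a heatmap is occlusion: hide one part of the input at a time and record how much the model output drops. The sketch below assumes a tiny 4x4 grayscale "leaf image" and a hypothetical scoring function `lesion_fraction` standing in for a trained disease classifier. Both are invented for illustration, and real systems would occlude larger patches of real images.

```python
def lesion_fraction(image, threshold=0.5):
    """Hypothetical stand-in for a disease classifier: fraction of 'lesion' pixels."""
    pixels = [v for row in image for v in row]
    return sum(1 for v in pixels if v > threshold) / len(pixels)

def occlusion_heatmap(model, image, baseline=0.0):
    """Attribution of each pixel = drop in model output when that pixel is occluded."""
    reference = model(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy, then hide one pixel
            occluded[r][c] = baseline
            heat_row.append(reference - model(occluded))
        heatmap.append(heat_row)
    return heatmap

if __name__ == "__main__":
    # Made-up 4x4 image: high values in the centre mimic a damaged leaf area.
    leaf = [
        [0.1, 0.1, 0.2, 0.1],
        [0.1, 0.9, 0.8, 0.1],
        [0.1, 0.7, 0.9, 0.2],
        [0.1, 0.1, 0.1, 0.1],
    ]
    for heat_row in occlusion_heatmap(lesion_fraction, leaf):
        print(["%.4f" % h for h in heat_row])
```

Only the four central pixels receive a non-zero attribution, which matches the damaged region that drives the toy score.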

These studies have produced many tools and frameworks for developing XAI systems. A brief overview:

- LIME: Uses an interpretable feature space and a local approximation fitted with sparse K-LASSO.

- SHAP: Additive method; uses Shapley values (from game theory) and unifies DeepLIFT, LRP, and LIME.

- Anchors: Model-agnostic and rule-based, sparse, with interactions.

- GraphLIME: Interpretable model for graph neural networks, built from a node’s N-hop neighbourhood.

- XGNN: Post-hoc global-level explanations for graph neural networks.

- Shapley Flow: Uses a graph-like dependency structure between variables.
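To make the Shapley-value idea behind SHAP more concrete, the following minimal sketch computes exact Shapley values by brute force over all feature orderings. It does not use the SHAP library, which relies on much more efficient approximations. The model `toy_model`, the input, and the all-zero baseline used to represent "missing" features are assumptions made for illustration.

```python
from itertools import permutations

def toy_model(x):
    """Hypothetical model with one additive term and one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]

def shapley_values(model, x, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings.

    A feature that is 'absent' takes its baseline value (an assumption of this sketch).
    Cost grows factorially with the number of features, so this only suits tiny inputs.
    """
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = x[i]            # add feature i to the coalition
            value = model(current)
            phi[i] += value - previous   # its marginal contribution in this ordering
            previous = value
    return [p / len(orders) for p in phi]

if __name__ == "__main__":
    x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phi = shapley_values(toy_model, x, baseline)
    print(phi)
    # Additivity: attributions sum to the gap between prediction and baseline output.
    print(sum(phi), toy_model(x) - toy_model(baseline))
```

In this toy case the first feature receives its full additive effect (2.0), while the interaction between the second and third features (worth 6.0) is split evenly between them. The attributions sum to the difference between the prediction and the baseline output, which is the additive property the SHAP entry above refers to.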

XAI in AgriDataValue Project

As part of the AgriDataValue project, which aims to drive digital transformation in agriculture at the European level, a specialized module is being designed and built to increase end-users’ trust and confidence in this technological ecosystem, which will make massive use of AI.

The Human Explainable AI Conceptual Framework Component will integrate fully with the AgriDataValue data platform and the Federated Machine Learning component, offering two main business services to users and citizen users:

- The ability to investigate the identity of an already trained AI model (what algorithm it is, what data it was trained on, what processing steps the data underwent, etc.).

- The ability to interpret a model’s decision on one or more data points.

Conclusions

AI explainability is still the object of lively and active research that has so far produced many tools and frameworks for the difficult task of explaining the predictions of AI models, in particular Black-Box models. It is quickly becoming one of the main areas of development for future AI systems. While research on XAI is still progressing, existing methods and frameworks are starting to be applied in application domains, including agriculture, in response to the growing interest of government bodies and legislators. One point on which all seem to agree is that the AI of the future should conform to human values, ethical principles, and legal requirements so as to ensure the privacy, security, and safety of its human users.

References

(1) “Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union legislative acts”, COM/2021/206 final, https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=celex:52021PC0206.

(2) Benos et al., 2021, “Machine Learning in Agriculture: A Comprehensive Updated Review”, MDPI.

(3) Gouravmoy Bannerjee, Uditendu Sarkar et al., 2018, “Artificial Intelligence in Agriculture: A Literature Survey”, ISSN 2319-1953.

(4) Masahiro Ryo, 2022, “Explainable artificial intelligence and interpretable machine learning for agricultural data analysis”, KeAi Communications Co.